The goal of this project is reinforce the work on sorting arrays by implementing the quicksort algorithm and to conduct a careful empirical study of the relative performance of sorting algorithms.

Your work will be twofold:

Recall the difference between insertion and selection sorts. Although they share the same basic pattern (repeatedly move an element from the unsorted section to the sorted section), we have

Insertion

Selection

Now, consider the basic pattern of merge sort:

More specifically, merge sort splits the array simplistically (it's the "easy" part). The reuniting is complicated. What if there were a sorting algorithm that had the same basic pattern as merge sort, but stood in analogy to merge sort as selection stands towards insertion? In other words, could there be a recursive, divide-and-conquer, sorting algorithm for which the splitting is the hard part, but leaves an easy or trivial reuniting at the end?

To design such an algorithm, let's take another look at what merge sort does. Since the splitting is trivial, the interesting parts are the recursive sorting and the reuniting. The recursive call sorts the subarrays internally, that is, each subarray is turned into a sorted subarray. The reuniting sorts the subarrays with respect to each other, that is, it moves elements between subarrays, so the entire range is sorted.

To rephrase our earlier question, the analogue to mergesort would be an algorithm that sorts the subarrays with respect to each other before sorting each subarray. Then reuniting them is trivial. The algorithm is called "quicksort," and as its name suggests, it is a very good sorting algorithm.

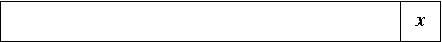

Here's a big picture view of it. Suppose we have an array, as pictured below. We pick one element, x, and delegate it as the "pivot." In many formulations of array-based quicksort, the last element is used as the pivot (though it doesn't need to be the last).

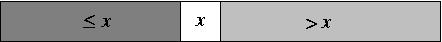

Then we separate the other elements based on whether they are greater than or less than the pivot, placing the pivot in the middle.

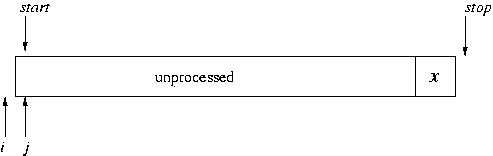

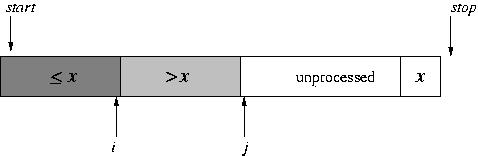

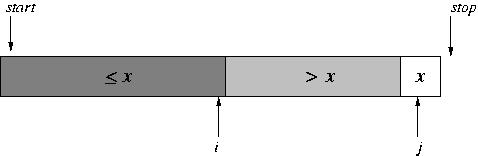

In a closer look, while we do this partitioning of the array, we maintain three portions of the array: the (processed) elements that are less than the pivot, the (processed) elements that are greater than the pivot, and the unprocessed elements. (In a way, the pivot itself constitutes a fourth portion.) The indices i and j mark the boundaries between portions: i is the last position of the portion less than the pivot and j is the first position in the unprocessed portion. Initially, the "less than" and "greater than" portions are empty.

During each step in the partitioning, the element at position j is examined. It is either brought into the "greater than" portion (simply by incrementing j) or brought into the "less than" portion (by doing a swap and incrementing both i and j).

(Think about that very carefully; it's the core and most difficult part of this project. Can you describe this in terms of loop invariants? Use them in writing the actual loop.)

At the end, the unprocessed portion is empty.

All that's left is to move the pivot in between the two portions, using a swap. We're then set to do the recursive calls on each portion.

Make a directory for this course (if you haven't already). Make a new directory for this project. Then copy into it some starter code, which will be similar to the code you started with in lab.

cd 245 mkdir proj1 cd proj1 cp /homes/tvandrun/Public/cs245/proj1/* .

I am giving you a makefile, but

you will need to modify it later in the project.

As with mergesort, there are two functions with quickSort

in their name.

The second function corresponds to the

prototype for quick sort found in sorts.h.

The function quickSort() is written for you,

but it merely sets up a call to quickSortR(),

which does the real work.

The reason for setting it up this way is that

quickSort() is to sort an entire array

and thus takes a reference to that array and the array's size.

quickSortR() on the other hand is a

helper function to sort a portion of an array,

and so it takes a reference to that array plus

a starting index and a stopping index.

You need to finish quickSortR().

As in lab, you are given a program sort_driver

that tests quick sort and other sorting algorithms.

To use it (after you have compiled everything using

make, run

./sort_driver quick

This will test quick sort on a variety of arrays and report on the results.

As we have been talking about in class, the various sorting algorithms are in different "complexity classes" in terms of the their worst case performance. Selection sort is O(n^2). Insertion sort and bubble sort each have best case O(n) and worst case O(n^2). Merge sort is O(n lg n), whereas quicksort is worst case O(n^2), best case O(n lg n). Shell sort is very difficult to analyze. What we would like to to is test how these algorithms behave in practice. To do that, we'll conduct experiments.

You may want to re-read the part of the pre-lab reading for lab 2 that talks about experiments to refresh your memory.

You will need to choose some questions to test and then write a program that sets up experiments and generates data to address those questions. Here are a few examples of questions to ask:

I have provided a library called array_util.

It has functions to fill an array with random values,

to fill an array with 0, to copy the contents from one array to another,

to get the current time, and a few other things.

Use this in setting up your experiments.

See the documentation in array_util.h

for details.

For example of usage,

look at sort_driver.c

as well as experiment.c from

lab 2 as an example (find it at ~tvandrun/Public/cs245/lab2/experiment.c).

Be very careful about timing things. Make sure you time only what you want to time. In the lab 2 experiments, there were pieces of code like

fore = get_time_millis();

selectionSort(copy, sizes[i]);

aft = get_time_millis();

Note that the sorting was the only thing that happened between the two time measurements. Do not include other things like generating arrays, copying arrays, etc.

Be very careful about how you use arrays. Suppose you want to compare how two sorting algorithms run on the same array. Don't do something like the following:

int sample[10000];

long fore, aft, selection_time, quick_time;

/* fill the array with random values. */

random_array(sample, 10000);

/* time selection sort */

fore = get_time_millis();

selectionSort(sample, 10000);

aft = get_time_millis();

selection_time = aft - fore;

/* time quick sort */

fore = get_time_millis();

quickSort(sample, 10000);

aft = get_time_millis();

quick_time = aft - fore;

What's the problem? Quick sort is sorting an already-sorted array! Instead, if you want to test a bunch of algorithms on the same sequence, first make a master version of that sequence and create a copy each time you sort it, like this:

int master[10000];

int copy[10000];

long fore, aft, selection_time, quick_time;

/* fill the array with random values. */

random_array(master, 10000);

/* time selection sort */

copy_array(master, copy, 10000); // fresh copy

fore = get_time_millis();

selectionSort(copy, 10000);

aft = get_time_millis();

selection_time = aft - fore;

/* time quick sort */

copy_array(master, copy, 10000); // fresh copy

fore = get_time_millis();

quickSort(copy, 10000);

aft = get_time_millis();

quick_time = aft - fore;

Note that the copying of the array is outside the section of code that we're timing.

Another hint is that you can have a larger array allocated

that you use.

The n parameter of the

array utility functions and sorting functions indicates how big

of an array to work on, but you can always simply pretend

the array is smaller than it actually is.

If we wanted to run selection sort on arrays of increasing

sizes, we could declare an array of the largest size and use

that same one for smaller sizes:

int sample[1000000];

int sizes[] = {10000, 50000, 100000, 50000, 1000000};

int i;

long fore, aft;

for (i = 0; i < 5; i++)

{

random_array(sample, sizes[i]);

fore = get_time_millis();

quickSort(sample, sizes[i]);

aft = get_time_millis();

printf("At size %d, it took %ld ms\n", sizes[i], aft - fore);

}

Also note that the code to print longs is

%ld.

Write a brief report (about one page for the text) describing your experiment (question(s) and methodology), reporting on the data, and drawing conclusions about the results. Include a graph/chart. LibreOffice (installed on the lab machines) can be used to make a spread sheet of the data and draw a chart.

Turn in your code, results, and write-up by copying them to a turn-in directory I have prepared for you. To turn in a specific file, do

cp (some file) /cslab.all/ubuntu/cs245/turnin/(your user id)/proj1

To turn every file in whatever directory you are currently in, do

cp * /cslab.all/ubuntu/cs245/turnin/(your user id)/proj1

DUE: Wednesday, Sept 23, 2015, 5:00 pm.

Grading for this project will follow this approximate point breakdown (the right to make minor adjustments in point distribution is reserved):